In this guide we break down the core principles and patterns you need so you can plan, build, and deploy reliable AI agents in production.

Why should you believe and trust us, one might ask?

Well, we've built dozens of AI agents in the past two years, and we've been learning and experimenting extensively with multiple LLM APIs.

Besides that, our team breathes and runs on gamification, and we always try to combine these two powerful technologies.

In this article, we're gonna cover:

If you're ready, let's jump right in.

At its core, an AI agent is a system that independently accomplishes tasks on your behalf.

Where conventional software or robotic process automation (RPA) streamlines workflows under explicit user control, an agent will:

Use a Large Language Model (LLM) to manage workflow execution and make decisions—knowing when to call tools, when a workflow is complete, and when to hand back control on errors.

Integrate with external tools (APIs, databases, legacy UIs) to gather context or take actions.

Operate within guardrails, using clear instructions and safety checks to stay on‑brand and on‑scope.

You have probably implemented a couple of automations already. If you built them with rules and if-this-then-that logic, you know that in some cases traditional automation hits a wall.

And that is where AI agents come in.

Think about those gray‑area decisions—like figuring out whether a refund truly qualifies—where rule‑based systems just can’t keep up.

Or consider the nightmare of maintaining an endless set of legacy rules for security reviews that grow more brittle every time you add a new exception.

And don’t get us started on unstructured data: parsing PDF documents, teasing out meaning from free‑form text, or carrying on a back‑and‑forth conversation to handle an insurance claim.

If your workflow demands complex judgment, buckles under an ever‑expanding rulebook, or depends on messy, unstructured inputs, AI agents can cut through the fog—adapting and learning in ways static systems simply cannot.

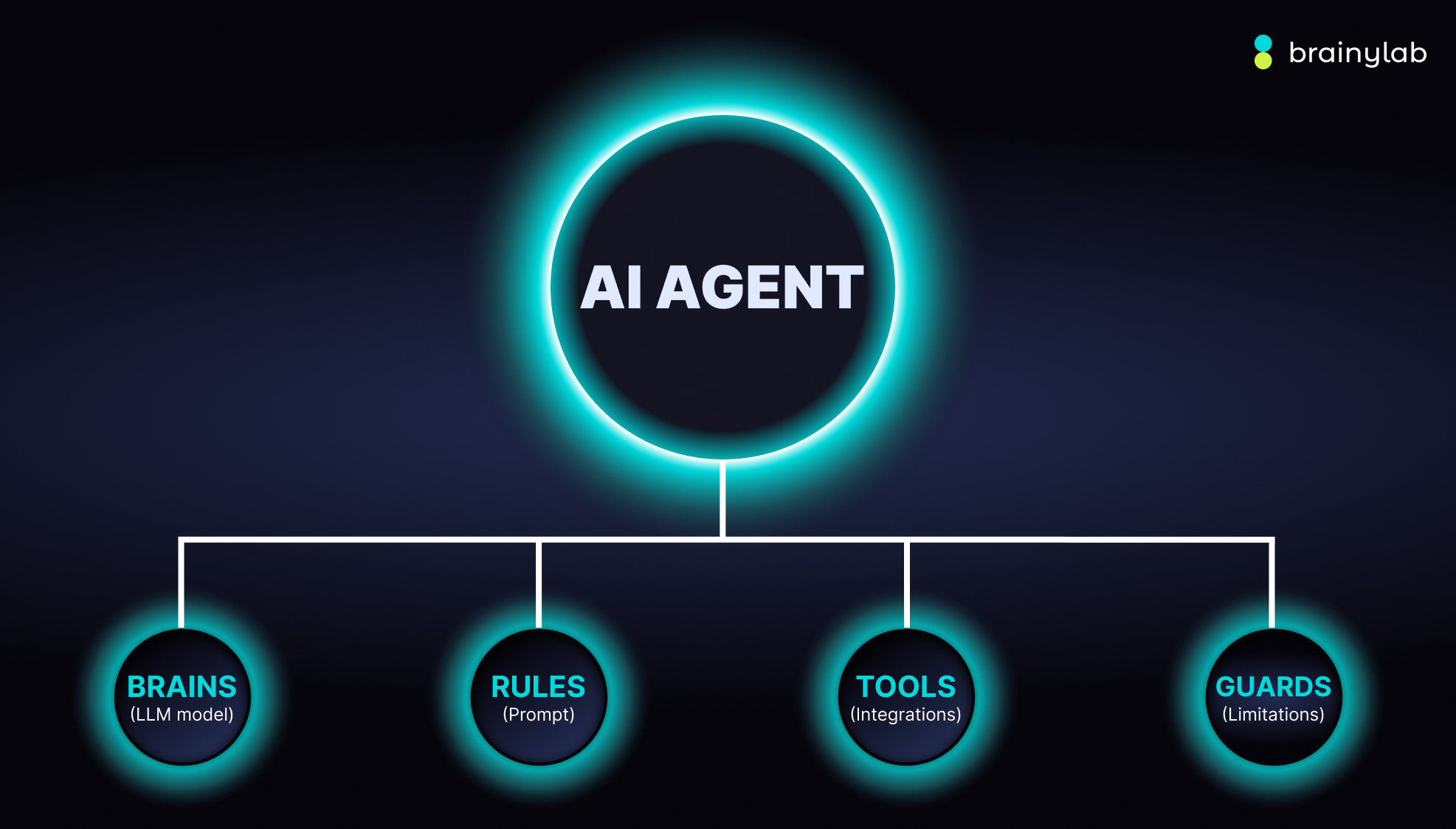

Before you even write your first line of code, you need to understand four foundational elements for any reliable AI agent.

Pick an LLM that thinks clearly for your domain. We start with the most capable model available (usually OpenAI’s latest GPT‑4o or its realtime sibling) so we can establish a “perfect‑world” benchmark. Once flows are solid, we down‑shift to slimmer, cheaper models for specific steps and measure the hit to quality.

The agent’s operating manual lives in its system prompt. We translate existing SOPs, policy docs, and call‑centre scripts into crisp, step‑by‑step instructions. Each step maps either to a tool call or a user‑facing message. Ambiguities disappear because we explicitly cover edge cases: missing data, unexpected languages, cheeky questions, you name it.

APIs, databases, legacy UIs—these are the agent’s eyes, ears, and hands. A good integration layer lets the model pull fresh context, update records, fire off emails, or raise a flag when things look fishy.

Even the smartest model will hallucinate or loop forever if you let it, so we build fences.

Guardrails protect your data, your brand, and your legal team’s sleep schedule.

When it comes to models, our approach is “start with the best, and iterate down.”

We always prototype with the most capable model available to establish a performance benchmark.

Only once those core workflows run smoothly do we swap in smaller, faster (and cheaper) models for individual tasks—measuring accuracy against your targets and diagnosing where the leaner versions fall short.

This approach ensures you never cap your agent’s potential before it even has a chance to shine.
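The "start with the best, and iterate down" comparison can be sketched as a small evaluation harness. This is a minimal illustration, not our production tooling: the `Model` type, the `accuracy` and `pick_cheapest_passing` helpers, the stand-in models, the cost figures, and the eval cases are all hypothetical, and exact string matching is assumed as the quality metric.

```python
from typing import Callable, List, Tuple

# Hypothetical type: a "model" is any callable that maps a prompt to an answer.
Model = Callable[[str], str]


def accuracy(model: Model, cases: List[Tuple[str, str]]) -> float:
    """Fraction of eval cases where the model's answer equals the expected one."""
    if not cases:
        raise ValueError("need at least one eval case")
    hits = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return hits / len(cases)


def pick_cheapest_passing(
    candidates: List[Tuple[str, float, Model]],  # (name, cost per call, model)
    cases: List[Tuple[str, str]],
    baseline_accuracy: float,
    max_drop: float = 0.05,
) -> str:
    """Return the cheapest candidate whose accuracy stays within max_drop of the baseline."""
    passing = [
        (cost, name)
        for name, cost, model in candidates
        if accuracy(model, cases) >= baseline_accuracy - max_drop
    ]
    if not passing:
        raise LookupError("no candidate meets the target; keep the baseline model")
    return min(passing)[1]


# Stand-in models so the sketch runs without any API access.
def big_model(prompt: str) -> str:
    answers = {"2+2": "4", "capital of France": "Paris", "refund after 40 days?": "no"}
    return answers.get(prompt, "")


def small_model(prompt: str) -> str:
    answers = {"2+2": "4", "capital of France": "Paris"}
    return answers.get(prompt, "unsure")


EVAL_CASES = [("2+2", "4"), ("capital of France", "Paris"), ("refund after 40 days?", "no")]
CANDIDATES = [("big", 1.0, big_model), ("small", 0.1, small_model)]

baseline = accuracy(big_model, EVAL_CASES)
print(pick_cheapest_passing(CANDIDATES, EVAL_CASES, baseline, max_drop=0.05))
```

With a strict tolerance the harness keeps the larger model, because the smaller one misses the refund case. Loosening `max_drop` is the explicit decision to trade accuracy for cost.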

So far, we have used OpenAI tools for most operations, since in our experience they offer the most advanced generation and reasoning models.

We saw some amazing results with GPT‑4o, o4-1 mini (and even the legacy 3.5, 4, and 4.5 models) for creative and fast text generation.

The Realtime API, meanwhile, was the best solution we found for building a voice AI agent in Slovenian (and several other smaller languages).

Clear, structured instructions (your “prompt” or “system routine”) are critical to reduce errors and misunderstandings:

Use existing documentation (operating procedures, policy scripts) as the basis for LLM‑friendly routines.

Break down tasks into smaller steps, minimizing multi‑intent blocks.

Define explicit actions: map each step to a tool call or user‑facing message.

Capture edge cases: anticipate missing info or unexpected user questions, and include conditional branches.

Well‑scoped routines leave less room for misinterpretation and fewer runtime errors.
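One way to keep a routine well scoped is to write it as structured data first and render the system prompt from it. The sketch below assumes a made-up refund routine. The `Step` fields, the `render_routine` helper, and the `get_order` tool name are hypothetical and only illustrate mapping each step to a tool call or a user-facing message, with a branch for missing information.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    name: str
    kind: str                      # "tool" or "message"
    target: str                    # tool name, or the text shown to the user
    requires: List[str] = field(default_factory=list)  # inputs that must be known
    on_missing: Optional[str] = None  # step to go to when a required input is missing


def render_routine(steps: List[Step]) -> str:
    """Turn the structured steps into numbered system-prompt instructions."""
    lines = []
    for number, step in enumerate(steps, start=1):
        if step.kind == "tool":
            action = f"call the tool `{step.target}`"
        elif step.kind == "message":
            action = f'tell the user: "{step.target}"'
        else:
            raise ValueError(f"unknown step kind: {step.kind}")
        line = f"{number}. {step.name}: {action}."
        # Edge case: spell out what to do when a required input is absent.
        if step.requires and step.on_missing:
            needed = ", ".join(step.requires)
            line += f" If {needed} is missing, go to step '{step.on_missing}' instead."
        lines.append(line)
    return "\n".join(lines)


REFUND_ROUTINE = [
    Step("Ask for order ID", "message", "Could you share your order number?"),
    Step("Look up order", "tool", "get_order",
         requires=["order_id"], on_missing="Ask for order ID"),
    Step("Confirm outcome", "message", "Your refund request has been recorded."),
]

print(render_routine(REFUND_ROUTINE))
```

Keeping the routine as data makes it easy to review each step on its own and to spot steps that try to do more than one thing.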

Integrations give the AI agent access to external tools and apps, so it can read information from them and write information back.

They are your agent’s eyes and ears, pulling in customer records from a CRM, parsing PDF specs, or even querying the web for fresh insights.

Connections with external applications allow the AI agent to update databases, fire off emails, escalate tickets, or hand tasks over to a human when a safety check trips.
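A thin integration layer can be sketched as a registry that maps tool names to ordinary functions and records which tools write data. Everything here is an assumption for illustration: the `ToolRegistry` class, the tool names, and the in-memory `CRM` dictionary stand in for real systems.

```python
from typing import Any, Callable, Dict


class ToolRegistry:
    """Maps tool names to plain Python functions and records whether they write data."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._writes: Dict[str, bool] = {}

    def register(self, name: str, writes: bool = False):
        """Decorator that adds a function to the registry under the given name."""
        def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = func
            self._writes[name] = writes
            return func
        return decorator

    def call(self, name: str, allow_writes: bool = True, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        # Write tools can be switched off, for example in a dry run.
        if self._writes[name] and not allow_writes:
            raise PermissionError(f"tool {name} writes data and writes are disabled")
        return self._tools[name](**kwargs)


registry = ToolRegistry()
CRM = {"c-1": {"name": "Ana", "tier": "gold"}}  # in-memory stand-in for a real CRM


@registry.register("get_customer")
def get_customer(customer_id: str) -> dict:
    """Read tool: return a copy of the customer record."""
    return dict(CRM[customer_id])


@registry.register("update_tier", writes=True)
def update_tier(customer_id: str, tier: str) -> dict:
    """Write tool: change the customer's tier and return the updated record."""
    CRM[customer_id]["tier"] = tier
    return dict(CRM[customer_id])


print(registry.call("get_customer", customer_id="c-1"))
```

Separating read tools from write tools gives you a simple switch for testing an agent against live data without letting it change anything.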

Guardrails are what keep your AI agent from going rogue, hallucinating answers, or doing something weird (or worse—legally questionable).

Picture three defence layers:

We start with broad privacy and safety checks, ship, observe failures in production, then tighten specific valves. The goal is a balance: safe enough to protect the brand, loose enough to keep the user experience smooth.

Always assume the agent might misbehave!

Especially when your agent is hooked up to external APIs, you need to make sure it doesn’t flood your systems with requests or enter an infinite loop because one tool didn’t respond properly.

Guardrails here look like throttling, cooldown timers, or fallback instructions when tools fail.

Well‑designed guardrails help you manage data‑privacy risks (e.g., prompt leaks) and reputational risks (e.g., off‑brand outputs).
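Throttling, cooldown timers, and fallbacks for failing tools can all live in one small wrapper around each tool. This is a minimal sketch under stated assumptions: `GuardedTool` and its parameters are hypothetical names, the call budget applies for the lifetime of the wrapper, and the clock is injectable so the behaviour can be checked without waiting.

```python
import time
from typing import Any, Callable, Optional


class GuardedTool:
    """Wraps a tool with a call budget, a cooldown between calls, and a fallback value."""

    def __init__(
        self,
        func: Callable[..., Any],
        max_calls: int,
        cooldown_s: float,
        fallback: Any,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.func = func
        self.max_calls = max_calls
        self.cooldown_s = cooldown_s
        self.fallback = fallback
        self.clock = clock
        self.calls = 0
        self.last_call: Optional[float] = None

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        now = self.clock()
        if self.calls >= self.max_calls:
            return self.fallback  # budget spent: stop hitting the tool
        if self.last_call is not None and now - self.last_call < self.cooldown_s:
            return self.fallback  # still cooling down since the last attempt
        # Attempts count against the budget even if the tool then fails.
        self.calls += 1
        self.last_call = now
        try:
            return self.func(*args, **kwargs)
        except Exception:
            return self.fallback  # tool failed: degrade instead of retrying forever


def flaky_lookup(order_id: str) -> str:
    """Stand-in for an external API that is currently down."""
    raise TimeoutError("upstream did not respond")


lookup = GuardedTool(flaky_lookup, max_calls=3, cooldown_s=2.0,
                     fallback="Sorry, I can't reach the order system right now.")
print(lookup("A1"))
```

Returning a fallback value instead of raising keeps the agent loop moving, and the agent's instructions can tell it to apologise or hand over to a human when it sees that value.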

Once you’ve got your foundations in place, choose an orchestration pattern that matches your workflow complexity:

A single agent loops through instructions, invoking tools and guardrails until an exit condition (e.g., final output, max turns, or error) is met.

When to use: Workflows where one central agent can handle the entire process without losing control or context.
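The single-agent loop and its three exit conditions can be sketched in a few lines. This is an illustration with assumed names: `run_agent`, the action dictionary shape, and `scripted_decide` (a scripted stand-in for the LLM call) are hypothetical and not part of any SDK.

```python
from typing import Any, Callable, Dict, List, Tuple

History = List[Tuple[str, str]]


def run_agent(
    decide: Callable[[History], Dict[str, Any]],
    tools: Dict[str, Callable[..., Any]],
    task: str,
    max_turns: int = 5,
) -> Dict[str, Any]:
    """Loop until the model gives a final answer, a tool fails, or max_turns is hit."""
    history: History = [("user", task)]
    for turn in range(max_turns):
        action = decide(history)
        if action["type"] == "final":
            # Exit condition 1: the model produced its final output.
            return {"status": "done", "output": action["output"], "turns": turn + 1}
        try:
            result = tools[action["tool"]](**action.get("args", {}))
        except Exception as exc:
            # Exit condition 2: hand back control on errors (including unknown tools).
            return {"status": "error", "output": str(exc), "turns": turn + 1}
        history.append(("tool", f"{action['tool']} -> {result}"))
    # Exit condition 3: turn budget exhausted.
    return {"status": "max_turns", "output": None, "turns": max_turns}


def scripted_decide(history: History) -> Dict[str, Any]:
    """Stand-in for an LLM call: look up the order once, then answer."""
    if len(history) == 1:
        return {"type": "tool", "tool": "get_order", "args": {"order_id": "A1"}}
    return {"type": "final", "output": f"Status: {history[-1][1]}"}


TOOLS = {"get_order": lambda order_id: "shipped"}

print(run_agent(scripted_decide, TOOLS, "Where is my order?"))
```

In a real agent, `decide` would send the history to the model and parse its reply. The loop around it stays the same.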

Workflows are distributed across specialized agents. Two common sub‑patterns:

Declarative graphs require defining every node (agent) and edge (call or handoff) upfront in a domain‑specific graph. They offer visual clarity but can become unwieldy for dynamic workflows.

Code‑first approaches (like the OpenAI Agents SDK) let you express workflow logic using familiar programming constructs—loops, conditionals, function calls—without pre‑defining the entire graph. This yields more adaptable, maintainable orchestration.
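To make the contrast concrete, here is the same tiny triage workflow written both ways. This is plain Python and deliberately does not use the OpenAI Agents SDK API. The agents are stand-in functions, and `GRAPH`, `run_graph`, and `run_code_first` are hypothetical names for illustration.

```python
from typing import Callable, Dict


def triage(ticket: dict) -> str:
    """Stand-in for a routing agent: pick a specialist by keyword."""
    return "billing" if "invoice" in ticket["text"].lower() else "support"


def billing_agent(ticket: dict) -> str:
    return f"billing handled #{ticket['id']}"


def support_agent(ticket: dict) -> str:
    return f"support handled #{ticket['id']}"


# Declarative style: every node and edge is spelled out up front.
GRAPH: Dict[str, dict] = {
    "nodes": {"billing": billing_agent, "support": support_agent},
    "edges": {"triage": ["billing", "support"]},
}


def run_graph(ticket: dict) -> str:
    target = triage(ticket)
    if target not in GRAPH["edges"]["triage"]:
        raise ValueError(f"no edge from triage to {target}")
    node: Callable[[dict], str] = GRAPH["nodes"][target]
    return node(ticket)


# Code-first style: routing is ordinary control flow, so a new rule is one more branch.
def run_code_first(ticket: dict) -> str:
    if triage(ticket) == "billing":
        reply = billing_agent(ticket)
        if ticket.get("vip"):
            reply += " (escalated to a human)"
        return reply
    return support_agent(ticket)


ticket = {"id": 7, "text": "My invoice is wrong", "vip": True}
print(run_graph(ticket))
print(run_code_first(ticket))
```

Adding the VIP rule to the graph version would mean a new node and new edges. In the code-first version it is a single `if`.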

AI agents open a Pandora's box for a new era of workflow automation: systems that reason through ambiguity, orchestrate across tools, and execute multi‑step tasks with autonomy.

To build reliable agents:

With this practical framework, you’ll be well‑equipped to unlock real business value—automating not just tasks, but entire workflows with intelligence and adaptability.

So, if you’re thinking about launching an AI product, our team is here to help with guidance or development expertise.

Reach out here and let's start talking about your first AI tool.